Komodo-7B: The First LLM for Regional Languages in Indonesia

Motivation

Recent advances in Large Language Models (LLMs) have focused mostly on high-resource languages such as English, leaving a large gap for languages with few published resources. Although models like GPT-3.5, GPT-4, and Llama-2 perform well on many tasks, they are evaluated primarily in English and tend to struggle with other languages. Multilingual models such as Aya-101, Bactrian-X, Qwen-1.5, and Mixtral, on the other hand, excel at tasks spanning many languages. However, they lack specialized expertise in individual languages, particularly small regional languages with limited data.

This performance gap underscores the need to focus on the specific challenges that Indonesian and its regional languages pose for language models. Currently, there is a notable absence of high-performing LLMs that are specifically designed for Indonesia, trained on Indonesian data, and evaluated against benchmarks for Indonesia’s regional languages.

Introducing Komodo-7B

In response, we are proud to introduce Komodo-7B, a Large Language Model with 7 billion parameters designed to fill this gap. It works with Indonesian, English, and 11 of Indonesia’s regional languages. Because it understands all of these languages, Komodo-7B can help address educational disparities in Indonesia by translating directly from English into 11 regional languages. This is a big improvement over Google Translate, which, among the languages we evaluated, supports only 4: Indonesian, English, Javanese, and Sundanese.

With Komodo-7B, businesses can now support customers from various parts of Indonesia without needing to hire agents who speak every regional language in Indonesia. For instance, if a customer speaks Lampungnese and the agent speaks Javanese, Komodo-7B can bridge the communication gap. It’s time to say goodbye to language barriers in customer service.

Besides translation, Komodo-7B can perform a wide range of tasks, including intent classification, sentiment analysis, and common sense reasoning. Beyond these, the base model can be adapted to other tasks by fine-tuning it on data for that specific use case.

Developing Komodo-7B was not easy. It required a huge amount of training data and extensive preprocessing to build a high-quality dataset. As the name suggests, Komodo-7B has 7 billion parameters, and it was trained on over 8.5 billion tokens, which corresponds to more than 20 gigabytes of data. The result is a model that performs exceptionally well in Indonesian and Indonesia’s regional languages.

Komodo-7B itself is a family of LLMs that consists of Komodo-7B-Base and Komodo-7B-Instruct.

Komodo-7B-Base is a large language model developed through incremental pretraining and vocabulary expansion on top of Llama-2-7B-Base.

Komodo-7B-Instruct is a fine-tuned version of Komodo-7B-Base, trained to follow a wide range of instructions.

Training Data

The dataset used in both the pretraining and fine-tuning phases combines diverse open-source datasets with manually collected data. In particular, we drew on freely available datasets collected primarily in Indonesian and in regional languages such as Javanese, Sundanese, Acehnese, and many more. Our aim is for the model to be well-versed not only in Indonesian but also in Indonesia’s regional languages. This approach improves the model’s overall language skills and its adaptability to different cultural contexts.

Vocabulary Expansion

As a reminder, a tokenizer is a crucial component that breaks input text into smaller units called tokens. Tokens are the basic building blocks the model uses to understand and generate text. To strengthen the model’s proficiency in both Indonesian and regional languages, we systematically expanded the tokenizer’s vocabulary. We identified and added approximately 2,000 frequently used Indonesian words and 1,000 words from Indonesia’s regional languages that were absent from the Llama-2 vocabulary.
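The selection step described above can be sketched as a frequency count over a corpus, keeping only words that are not already in the vocabulary. This is a minimal illustration under stated assumptions. The helper name `frequent_missing_words`, the regex word split, and the lowercasing are all hypothetical, not the authors' preprocessing. A real Llama-2 SentencePiece vocabulary also marks word-initial pieces with a leading "▁", so the membership check would need to account for that.

```python
from collections import Counter
import re


def frequent_missing_words(corpus, vocab, n):
    """Return the n most frequent words in `corpus` that are not entries in `vocab`.

    Hypothetical helper: lowercasing and a simple \\w+ word split are
    illustrative assumptions, not the preprocessing used for Komodo-7B.
    """
    counts = Counter()
    for line in corpus:
        counts.update(re.findall(r"\w+", line.lower()))
    # Keep only words the existing vocabulary does not already contain.
    missing = [(word, count) for word, count in counts.items() if word not in vocab]
    # Highest count first; break ties alphabetically so the result is deterministic.
    missing.sort(key=lambda wc: (-wc[1], wc[0]))
    return [word for word, _ in missing[:n]]


corpus = ["saya mau kirim uang", "uang saya belum dikirim", "kirim uang sekarang"]
vocab = {"saya", "mau"}
print(frequent_missing_words(corpus, vocab, 2))  # ['uang', 'kirim']
```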

The standard method for expanding a language model’s vocabulary is to train a new tokenizer on the target-language corpus and merge it with the existing one. This approach has shown impressive results in projects like Chinese-LLaMA and Open-Hathi. It works well there because Chinese and Hindi differ so much from English. Their scripts, Han characters and Devanagari, are poorly covered by the original Llama vocabulary, so a dedicated tokenizer contributes many genuinely new tokens. In contrast, Indonesian and its regional languages use the same Latin script as English, which presents a different challenge. The existing vocabulary already covers the characters, but it splits many Indonesian words into several subword pieces.
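The core of the train-and-merge approach can be illustrated with a small sketch. Pieces from a newly trained tokenizer are appended to the base vocabulary, and every existing token ID is preserved so the pretrained embedding rows still line up with their tokens. This sketch assumes the vocabulary is a plain `dict` from piece to ID. The function name `merge_vocab` is hypothetical. Real merges operate on tokenizer model files, such as SentencePiece protos, rather than Python dictionaries.

```python
def merge_vocab(base_vocab, new_pieces):
    """Append pieces from a newly trained tokenizer to an existing vocabulary.

    Existing token IDs are left untouched; only pieces not already present
    receive new IDs, assigned consecutively after the current maximum ID.
    """
    merged = dict(base_vocab)
    next_id = max(merged.values()) + 1 if merged else 0
    for piece in new_pieces:
        if piece not in merged:
            merged[piece] = next_id
            next_id += 1
    return merged


base = {"<unk>": 0, "a": 1, "an": 2}
merged = merge_vocab(base, ["an", "ang", "nya", "ang"])
print(merged)  # {'<unk>': 0, 'a': 1, 'an': 2, 'ang': 3, 'nya': 4}
```

Because the base IDs never move, only the rows for the appended pieces need fresh embeddings.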

We tested the traditional method as well as a new approach. In the new approach, we added the top n most frequent words (not subword tokens) from the Indonesian and regional-language vocabulary directly, without training a new tokenizer. With this approach, we achieved better fertility scores by adding around 3,000 new vocabulary entries. Adding more than 3,000 words did not significantly improve the fertility score further, but it enlarged the embedding matrix and therefore lengthened training. Here, the fertility score is the average number of tokens the tokenizer produces per word. Lower is better, and a score of 1.0 means every word maps to a single token.
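To make fertility concrete, here is a sketch that measures it as tokens per word and shows how adding whole words to a vocabulary lowers it. The tokenizer here is a toy greedy longest-match segmenter used purely for illustration. It is not Llama-2's SentencePiece BPE, and the tiny vocabularies are made up.

```python
def greedy_tokenize(word, vocab):
    """Toy longest-match-first segmentation; characters not covered become single-char tokens."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest substring starting at position i that is in the vocabulary.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            tokens.append(word[i])  # fall back to a single character
            i += 1
    return tokens


def fertility(text, vocab):
    """Average number of tokens per whitespace-separated word (lower is better)."""
    words = text.split()
    n_tokens = sum(len(greedy_tokenize(w, vocab)) for w in words)
    return n_tokens / len(words)


text = "uang saya belum dikirim"
base_vocab = {"u", "ang", "sa", "ya", "be", "lum", "di", "kirim"}
expanded_vocab = base_vocab | {"uang", "saya"}  # add two frequent whole words

print(fertility(text, base_vocab))      # 2.0
print(fertility(text, expanded_vocab))  # 1.5
```

Each added whole word collapses several pieces into one token. Every new entry also adds a row to the embedding matrix, which is why the returns diminish once the most frequent words are covered.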

Incremental Pretraining & Fine-Tuning

For pretraining, the corpus amounted to approximately 8.79 billion tokens as counted by our tokenizer. We conducted incremental pretraining on top of Llama-2-7B-Base for 3 epochs using LoRA. This approach helps mitigate catastrophic forgetting and reduces hardware and cost requirements. Training used 8 x A100 40GB GPUs and took approximately 300 hours. We then refined the model through Supervised Fine-Tuning (SFT) on diverse tasks for 5 epochs, again using LoRA. On the same GPU configuration, SFT took about 36 hours.
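A quick way to see why LoRA reduces hardware requirements is to count trainable parameters. LoRA freezes a `d_out x d_in` weight and learns a low-rank update `B @ A`, where `B` is `d_out x r` and `A` is `r x d_in`. The sketch below uses Llama-2-7B's hidden size of 4096. The rank of 8 is an illustrative assumption, not the setting used for Komodo-7B. The last lines convert the reported run times into GPU-hours.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_in + d_out)


# One 4096 x 4096 projection in Llama-2-7B; rank 8 is a hypothetical choice.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%

# GPU-hours implied by the reported runs on 8 GPUs.
pretrain_gpu_hours = 8 * 300
sft_gpu_hours = 8 * 36
print(pretrain_gpu_hours, sft_gpu_hours)  # 2400 288
```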

Evaluation & Results

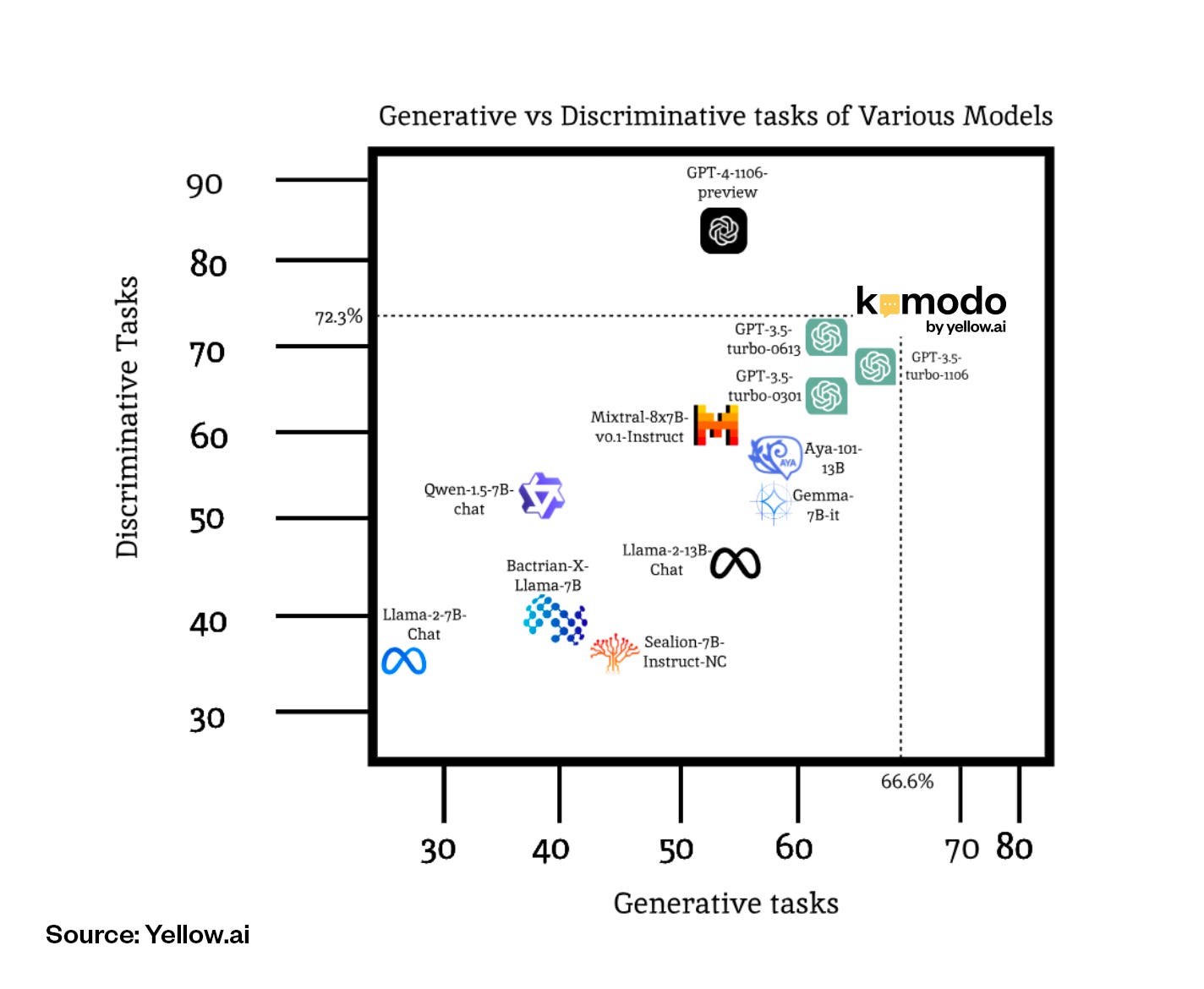

We evaluated Komodo-7B-Instruct against multiple open-source and closed-source massively multilingual models to ensure a comprehensive evaluation. As the above figure shows, Komodo-7B-Instruct outperforms all other “strong” open-source models, as well as GPT-3.5, on both the generative and discriminative tasks in our benchmarks.

Komodo-7B also lowers inference cost by up to 30%, because it needs fewer tokens than Llama-2-7B to encode the same sentence, as measured by the fertility score. The tokenizers of Llama-2-7B, our baseline model, and Komodo-7B, the enhanced version, differ notably, as shown in the above table. Llama-2-7B has mean fertility scores of 2.858 for Indonesian, 2.658 for Indonesia’s regional languages, and 1.666 for English, with a vocabulary size of 32,000. Komodo-7B improves these substantially, with mean fertility scores of 2.031 for Indonesian, 1.996 for regional languages, and 1.633 for English, and an expanded vocabulary size of 35,008.
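The reported numbers can be turned into the trade-off discussed earlier. The first part shows how many fewer tokens Komodo-7B needs per word in each language. The second part shows how many parameters the larger vocabulary adds. Two simplifying assumptions apply: inference cost scales with token count, and the embedding growth uses Llama-2-7B's hidden size of 4096. Llama-2 keeps separate input-embedding and output (LM head) matrices, so both grow with the vocabulary.

```python
# Mean fertility (tokens per word) as reported above.
llama2 = {"Indonesian": 2.858, "Regional": 2.658, "English": 1.666}
komodo = {"Indonesian": 2.031, "Regional": 1.996, "English": 1.633}

# Relative reduction in tokens needed to encode the same text.
savings = {lang: 1 - komodo[lang] / llama2[lang] for lang in llama2}
for lang, saving in savings.items():
    print(f"{lang}: {saving:.1%} fewer tokens")
# Indonesian: 28.9% fewer tokens
# Regional: 24.9% fewer tokens
# English: 2.0% fewer tokens

# Cost side: extra rows in the input embedding and the LM head.
hidden_size = 4096
added_tokens = 35_008 - 32_000
extra_params = 2 * added_tokens * hidden_size
print(added_tokens, extra_params)  # 3008 24641536
```

Roughly 25M extra parameters is small next to 7B, while Indonesian text needs about 29% fewer tokens. That is consistent with the "up to 30%" figure.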

The above figure compares the translation capabilities of Komodo-7B-Instruct with those of Google Translate and shows which languages each system supports. The heatmap on the right illustrates Google Translate's proficiency, concentrated in Javanese, English, Indonesian, and Sundanese. However, it also leaves many cells empty, corresponding to languages it does not support.

Conversely, the left side of the heatmap shows the broader coverage of Komodo-7B-Instruct, which spans 11 of Indonesia’s regional languages. This extends the reach of education in Indonesia by enabling direct translation from English into a diverse range of regional languages. These include languages that many models and translation systems do not support, such as Acehnese, Balinese, Banjarese, Buginese, Madurese, Minangkabau, and Toba Batak.

The broader coverage of Komodo-7B-Instruct ensures that individuals across various regions in Indonesia, beyond Java, can benefit from education in their native languages. This not only enhances accessibility but also addresses the challenge of language diversity in educational settings. Therefore, Komodo-7B-Instruct stands as a promising solution for bridging educational gaps and encouraging inclusivity in language learning.

In addition to quantitative benchmarking, we conducted qualitative testing by providing various general instructions to the model. Figure 7 displays a sample of these instructions along with the responses from Llama-2-7B-Finetuned and Gemma-7B-Finetuned. It is worth noting that both Llama-2-7B-Finetuned and Gemma-7B-Finetuned were trained on the same data as Komodo-7B-Instruct.

Here, we ask each model to perform intent classification on the input sentence “Aya naon iye teh, gx kkirim2 duit nya?”, which means “What’s actually the problem? My money has not been transferred yet.” Note that the sentence is written in slang Indonesian mixed with Sundanese, one of Indonesia's regional languages. The correct intent is “kendala transaksi” (“transaction issue”). The other options are “kembalikan uang” (“money refund”), “kembalikan barang” (“item return”), and “tidak ada” (“none”).
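A test like this can be scripted by building a prompt that lists the allowed intents and then mapping the model's free-form reply back to one of them. The sketch below is hypothetical throughout. The prompt wording, `build_intent_prompt`, and `parse_intent` are illustrative and do not reproduce the template used to train or evaluate Komodo-7B-Instruct. The model call itself is omitted.

```python
INTENTS = ["kendala transaksi", "kembalikan uang", "kembalikan barang", "tidak ada"]


def build_intent_prompt(text, intents):
    """Hypothetical zero-shot prompt listing the allowed intents."""
    options = "\n".join(f"- {intent}" for intent in intents)
    return (
        "Classify the intent of the following sentence.\n"
        f"Sentence: {text}\n"
        f"Options:\n{options}\n"
        "Intent:"
    )


def parse_intent(response, intents):
    """Map a free-form model response to one allowed intent, or None if none matches."""
    normalized = response.strip().lower()
    # Check longer labels first so a label contained in another cannot shadow it.
    for intent in sorted(intents, key=len, reverse=True):
        if intent in normalized:
            return intent
    return None


prompt = build_intent_prompt("Aya naon iye teh, gx kkirim2 duit nya?", INTENTS)
print(parse_intent("Kendala transaksi", INTENTS))  # kendala transaksi
```

Constraining the answer to a fixed label set makes the comparison between models a simple exact-match check.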

As can be seen, both Llama-2-7B-Finetuned and Gemma-7B-Finetuned return wrong intents while Komodo-7B-Instruct successfully returns the correct intent.

Future Works

In the future, we plan to enhance the capabilities of Komodo-7B-Instruct by training task-specific large language models (LLMs) on top of the existing Komodo-7B-Base model. While the current model performs well across a wide range of instructions, the task-specific models will be tailored to excel at their respective tasks. For example, we plan separate models for question answering, intent classification, and other tasks. We expect this approach to further improve performance, making the models even more suitable for production use.

We also plan to further enhance Komodo-7B-Instruct by creating a conversational variant of the model. This iteration will be trained to comprehend not only single-turn but also multi-turn conversations, enabling it to grasp the context of a dialogue. By enhancing the model's capacity to understand conversational nuances, we anticipate improving its capability to deliver accurate and contextually relevant responses.

Finally, we aim to train even larger versions of Komodo, potentially with 13B, 33B, or even 70B parameters! The goal is to improve model quality through the additional capacity that more parameters provide.

Paper and Model Release

For further insights, we encourage you to explore the research paper on ArXiv via the following link: Link to the research paper.

Additionally, you can access the Komodo-7B-Base model on Hugging Face's model hub: Link to Komodo-7B on Hugging Face.